Future of TV Briefing: The challenges in using generative AI tools for video productions

This Future of TV Briefing covers the latest in streaming and TV for Digiday+ members and is distributed over email every Wednesday at 10 a.m. ET.

This week’s Future of TV Briefing looks at the limitations video producers and VFX specialists encounter when using generative AI tools.

- Instable diffusion

- Upfront with BET’s Louis Carr

- TV watch time slips some more

- NBCUniversal’s & Group Black’s streaming ad deal, LinkedIn’s streaming ad ambitions, Twitch’s latest star defection and more

Instable diffusion

The key hits:

- Image-generating AI tools present a challenge in achieving consistent video aesthetics.

- Including consistent keywords and archetypes can help to force some uniformity in the image outputs.

- The lack of control makes it hard to use generative AI tools for professional productions.

Generative artificial intelligence technology is already proving pretty handy on video productions, as covered in last week’s briefing. Nonetheless, tools such as Midjourney, Stable Diffusion and D-ID still require video producers and visual effects specialists to take a hands-on approach to working around the tools’ shortcomings.

“It’s all about R&D. You need to understand the limitations,” said Ryan Knowles, head of 2D and VFX supervisor at visual effects studio Electric Theatre Collective, which incorporated Stable Diffusion in producing the visual effects for Coca-Cola’s “Masterpiece” video campaign. “Understand the benefits of using the tool because you wouldn’t be using it if there weren’t benefits. But also understand the limitations and then factor that into how you approach creating final images.”

When it comes to video projects, a primary challenge that generative AI tools present to their human collaborators is achieving a consistent look.

Given that a video is just a series of still images, it makes complete sense that image-generating AI tools like Midjourney and Stable Diffusion — which are designed to churn out still images — could be co-opted to create the frames that make up a video. And as the folks at Electric Theatre Collective, production studio Curious Refuge and AI video ad developer Waymark have shown, that is the case. But in each case, the teams working on the projects had to determine how to ensure the images being spit out resembled one another.

“Character consistency — just continuity in general is a huge challenge,” said Stephen Parker, head of creative at Waymark, a company that develops AI video ad tools for businesses and that produced a 12-minute-long AI-animated short film “The Frost.”

For example, “The Frost” features characters that show up in multiple scenes, meaning they need to look the same or similar enough in each appearance so as to be recognizable. To pull that off, Waymark’s team relied on archetypes that would lend themselves to uniform prompts submitted to OpenAI’s DALL-E 2, which was used to generate the short film’s images.

For a character in the style of a mad scientist, “he’s continually prompted as like ‘a gray-haired, wily-esque scientist in a white lab coat,’” said Parker. “You’re just trying to give DALL-E enough of an archetype to hold onto that you can suspend disbelief as you’re making your way through the cuts. And then you’re generating quite a few images, so you are kind of looking for the same archetype within those generations.”

Similarly, Curious Refuge relied on consistent prompting to get a stable look out of Midjourney for a series of trailers in the style of film director Wes Anderson. “Specifically for the ‘Star Wars’ and ‘Lord of the Rings’ projects, I didn’t have to do much beyond just making sure that my prompts included consistent information,” said Curious Refuge CEO Caleb Ward. “For example, ‘in the style of Wes Anderson,’ ‘editorial quality,’ ‘cinematic still.’ There was about six different prompts that I really applied to every image to art direct it in a certain direction.”

Nonetheless, an image prompt can be repeated ad infinitum but will not result in identical images because the AI tools are designed to generate images, not to recreate them. For as much as a consistent look can be achieved with the help of a human hand guiding the image generation process, there is an element of instability inherent in these tools.

Case in point: Waymark used D-ID’s Creative Reality Studio to animate characters’ facial expressions by uploading clips from “The Frost.” However, at times the tool would change the face from the original shot. “You’re not getting exactly the image that you put into it,” Parker said.

Both Waymark and Curious Refuge largely used the image-generating AI tools to produce single still images that they held on screen for seconds at a time. To avoid these shots looking like a slideshow, they animated parts of the images, such as by editing in a zoom-out effect to create camera movement or using Creative Reality Studio to animate characters’ facial expressions.

This animated-stills approach was a way to work around the fact that, whatever effort is made to produce consistent images, the AI tools will not generate completely consistent images. Those inconsistencies will become readily apparent when flipping between them frame by frame, like paging through a flipbook or viewing a stop-motion animation in which a character’s position is not entirely uniform.

That inherent inconsistency was something Electric Theatre Collective had to keep in mind when using Stable Diffusion to help animate paintings for Coca-Cola’s campaign, said Knowles. “One of the most important parts was the flow, the energetic journey from one artwork into another artwork. If you fly into the [J.M.W.] Turner painting and there’s too much chatter and too much boil because the oil paints are regenerating frame by frame, it breaks that flow [and] takes you out of it.”

For all the effort to account for the generative AI tools’ consistency challenges, though, at some point the human collaborators had to surrender to their AI counterparts’ eccentricities. Waymark’s team eventually gave itself over to the “weird, stilted” movement that the AI-generated images conjured. “After a while it was like stop fighting it, just go with the weirdness of this thing and make the art we can make from that,” Parker said.

The flip side of that, though, is that the current lack of control puts a bit of a cap on the generative AI tools’ application for professional projects. As with Parker, Knowles expressed an appreciation for how the inconsistency of the AI-generated images lends them an original aesthetic and how the best AI-generated videos embrace the instability.

“But if you want to go back and fine-tune it, you can’t. And that’s the most challenging part,” Knowles said. “That’s the next step: How can these tools be integrated into professional creative arts where you have a brief and you have these visions you want to execute but you need to be able to control your tools to some degree?”

What we’ve heard

“Twitch has had a lot of changes from a leadership perspective, and have constantly tried to change the business model of what it means to be a creator on their platform. And the reality is, they’ve made so many changes and backpedaled a number of times — it’s quite frustrating for streamers.”

— UTA’s Damon Lau on the Digiday Podcast

Upfront with BET’s Louis Carr

This year’s annual TV upfront advertising negotiations may drag on a bit. At least, that’s the experience so far for BET president of media sales Louis Carr.

“I think maybe, as of today, we’ve only received maybe two budgets or something like that,” he said in an interview on June 14. “I don’t think the marketplace overall is in any hurry. On either side, whether it is agencies or media companies, I don’t think anybody is really in a hurry to get this done — and not anybody who thinks they’re going to do well is in a hurry to get it done.”

A slow-rolling upfront — at least compared to the breakneck pace of the past two upfront cycles — had been an expectation among some TV ad buyers and sellers heading into this year’s negotiation. The economic downturn has dampened advertiser spending since last summer, and some industry executives thought a longer upfront cycle could see some recovery and renewed spending.

“It seems like everybody has pumped the brake. I don’t see anybody rushing to do deals,” said Carr.

Deals will be done, though, eventually. From where Carr sits, the market will get much more active in a couple weeks. “A lot of things will move in July, and then there’ll be some mopping up to do in August,” he said.

Not that BET’s sales team is sitting on its hands until then. The Paramount-owned (for now) TV network participates in the upfront separately from its parent company and is pitching BET’s inventory as well as VH1’s, but is not commingling the properties in deals.

Another property being pitched in this year’s market is a new ad-supported tier to BET’s BET+ streaming service. “We’re selling that as a premium opportunity that has premium content,” Carr said.

As for premium pricing, he wouldn’t divulge the exact CPM BET is seeking for its ad-supported streaming tier — which will feature six minutes of ads per hour of programming — but said the figure is “north of $25.”

Numbers to know

10: Number of years that Allen Media Group has agreed to use VideoAmp as its primary measurement currency under a new deal.

-7.9%: Forecasted percentage decline in video ad revenues in 2023 compared to 2022.

49.9 million: Estimated number of U.S. households that will have pay-TV subscriptions in 2027.

TV watch time slips some more

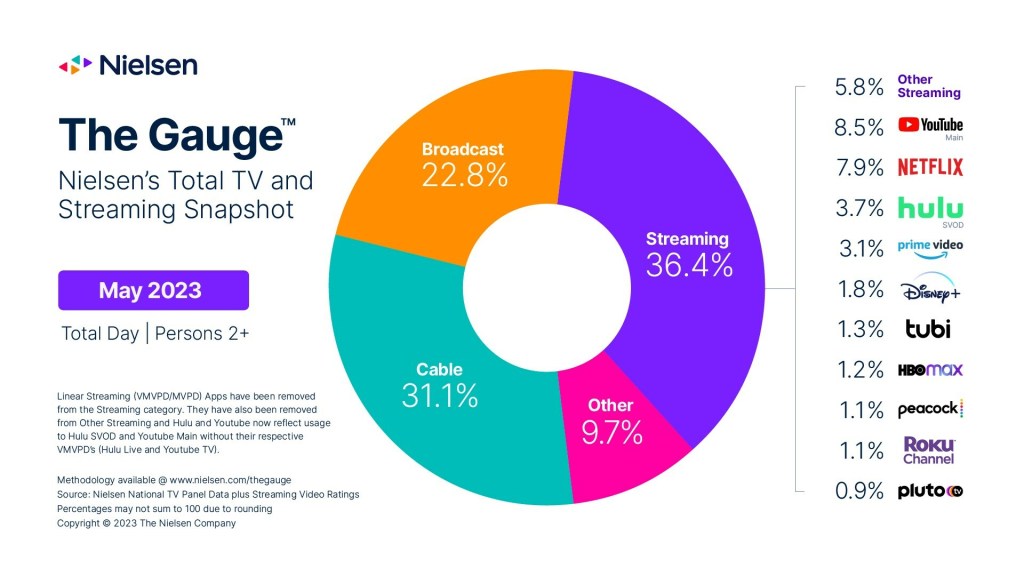

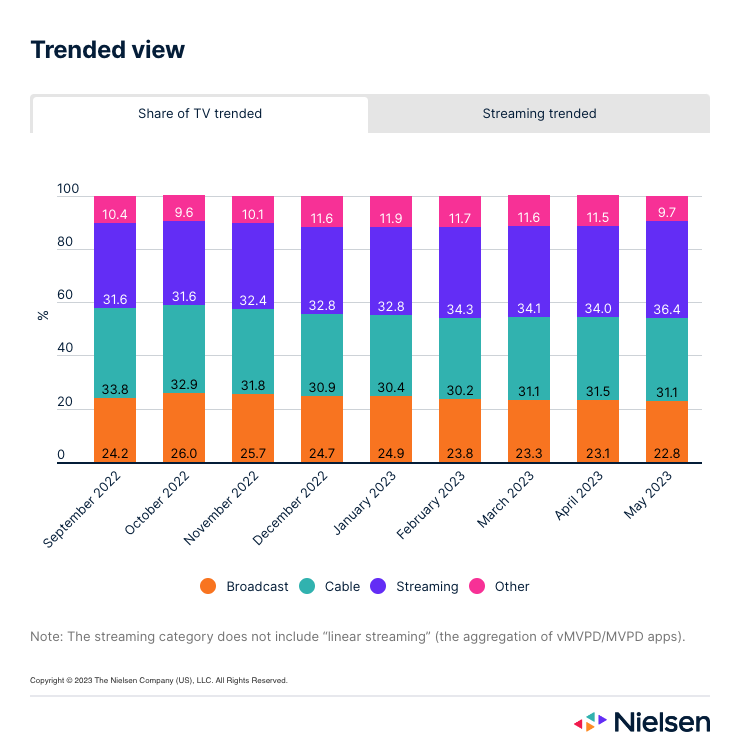

For the fourth straight month, the time people in the U.S. spent sitting in front of their TV screens declined month over month in May, according to Nielsen’s latest The Gauge TV viewership report. However, streaming’s watch time inched up, and Roku’s The Roku Channel became the third free, ad-supported streaming TV service to account for at least 1% of overall TV watch time.

The overall TV watch time picture has looked pretty much the same since streaming overtook cable TV in July 2022 to have the top share of watch time among delivery categories. In May, streaming widened its lead a bit, with the amount of time people spent streaming increasing by 2.5% month over month. Overall, people spent 4.4% less time watching their TV screens in May than in April and 2.7% less time than in May 2022.

Within the streaming category, Netflix chipped away at YouTube’s watch time lead, which had grown to exceed 1 percentage point in April. Nonetheless, YouTube’s share of watch time still increased by 0.4 percentage points from April to May.

The other notable development on the streaming side was the addition of The Roku Channel, which reached the 1% watch-time share threshold to be broken out by Nielsen. Paramount’s Pluto TV and Fox’s Tubi had previously been the only two FAST services to hit that mark, though Pluto TV has not hit it again since September 2022.

What we’ve covered

As Twitch backpedals on rev-share policy, UTA’s Damon Lau thinks creators are poised to win:

- Twitch’s leadership changes and rev-share shifts have frustrated streaming creators.

- While esports is struggling, the gaming creator industry is growing, said the talent agent.

RTL and The Trade Desk ink addressable TV ad tie-up:

- The European broadcaster and ad tech firm will enable programmatic ad buying for streaming and traditional TV.

- The programmatically available inventory will span 30 million households.

How PewDiePie’s rebrand shows gaming creators’ growing focus on brand safety:

- The YouTube creator has updated his channel’s logo, among other visual assets.

- The rebrand signals the channel’s — and its corresponding content category’s — attempt to mature.

Refinery29 launches Twitch’s first third-party live shopping experience:

- The Vice-owned publisher streamed a shoppable show on the Amazon-owned live streaming platform.

- It was the first live shopping stream on Twitch run by a third-party company.

Study finds open programmatic CTV ad spend slows for the first time in years:

- Advertisers spent 5% less money on CTV ads bought in the open programmatic market in Q1 2023 than in Q1 2022.

- The open programmatic market plays a minor role in the CTV ad market.

What we’re reading

Group Black’s & NBCUniversal’s streaming ad split:

NBCUniversal will let Group Black sell ads in shows on Peacock that are popular among Black audiences, and the companies will share the resulting revenue, according to The Wall Street Journal.

LinkedIn is testing a way to target its users with ads while they’re using streaming services, according to The Information.

Starting this fall, Twitch will let creators keep 70% of their channels’ subscription revenue, though only on the first $100,000 earned, according to The Verge.

Twitch’s latest star defection:

Top Twitch streamer xQc has signed a $100 million deal to stream on rival platform Kick, according to The New York Times.
