
Journalists are using generative AI tools without company oversight, study finds

Nearly half of journalists surveyed in a new report said they are using generative AI tools not approved or bought by their organization.

That’s according to a survey by Trint, an AI transcription software platform, which asked producers, editors and correspondents from 29 global newsrooms how they plan to use AI for work this year.

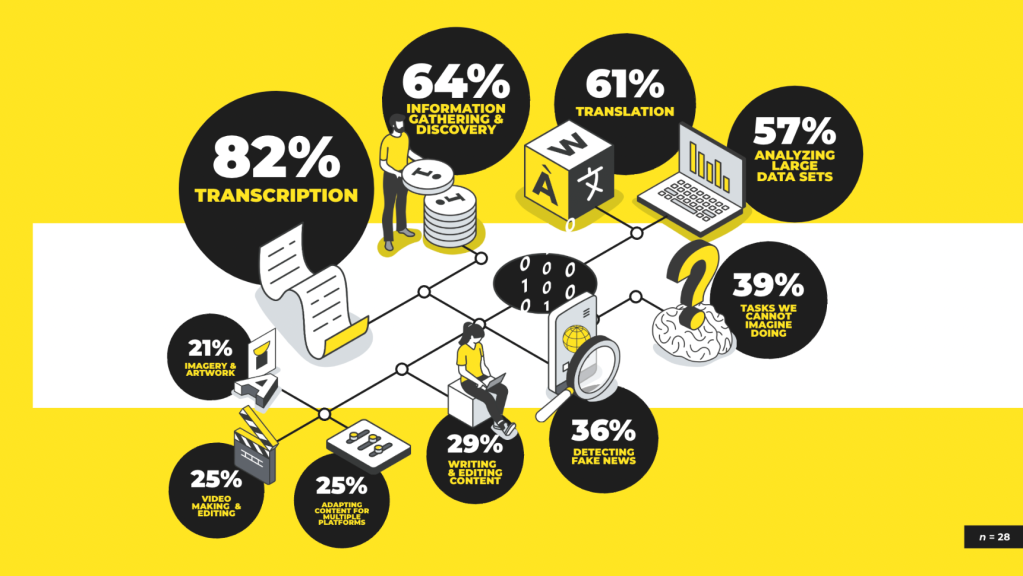

The report found that 42.3% of journalists surveyed are using generative AI tools at work that are not licensed by their company. Journalists said their newsrooms were adopting AI tools to improve efficiency and stay ahead of competitors, and they expected the use of AI for tasks like transcription and translation, information gathering and analyzing large volumes of data to increase over the next few years.

Trint’s survey also found that just 17% of respondents considered “shadow AI” (the use of AI tools or apps by employees without company approval) a challenge newsrooms face when deploying generative AI tools. That was far below concerns like inaccurate outputs (75%), reputational risks to journalists (55%) and data privacy (45%).

“Plenty of editorial staff here use AI from time to time, for example to reformat data or as a reference tool,” said a Business Insider employee, who spoke to Digiday on the condition of anonymity. “Some of them do pay for it out of their own pockets.”

According to Trint’s report, 69% of respondents said making efficiency gains was the main reason their newsrooms were adopting generative AI in 2025.

But the Business Insider employee said these use cases for generative AI tools fall into a gray area. The guidance from company management has been focused on principles, rather than specific orders on what employees can and can’t do with the technology, they said.

“We encourage everyone at Business Insider to use AI to help us innovate in ways that don’t compromise our values. We also have an enterprise LLM available for all employees to use,” said a Business Insider spokesperson. (Business Insider’s previous editor in chief Nicholas Carlson published a memo in 2023 outlining these newsroom guidelines.)

“They’re not approved [tools], but they’re not disapproved,” the employee said, adding that they have been advised not to enter confidential information into generative AI systems and to be “skeptical of the output.”

A publishing exec, who traded anonymity for candor, said AI technology is evolving so quickly that companies’ corporate compliance infrastructure may have a hard time keeping up, especially when it comes to legal and data security.

“I think the risk of individual staffers using these tools is pretty small … and I think it will be very hard to get employees to stop using tools that actually work well and make their jobs easier,” the exec said.

Felix Simon, a research fellow in AI and news at Oxford University who studies the implications of AI for journalism, told Digiday it all boils down to what journalists are using the technology for.

“Not all non-approved AI must be dangerous,” Simon said. For example, if an employee downloads a large language model and uses it locally, that would not necessarily be a security risk, he said.

However, using a non-approved system connected to the internet would be “more problematic if you feed it with sensitive data,” he added.

The best approach, according to the publishing exec, is to explain these risks “in a realistic way that also includes risks to them personally.”

To mitigate the pitfalls associated with generative AI use, 64% of organizations plan to improve employee education and 57% will introduce new policies on AI usage this year, according to Trint’s report.

The New York Times approved the use of some AI tools for its editorial and product teams two weeks ago, Semafor reported. The company outlined what editorial staff can and can’t do with the technology — and noted that using some unapproved AI tools could leave sources and information unprotected.
