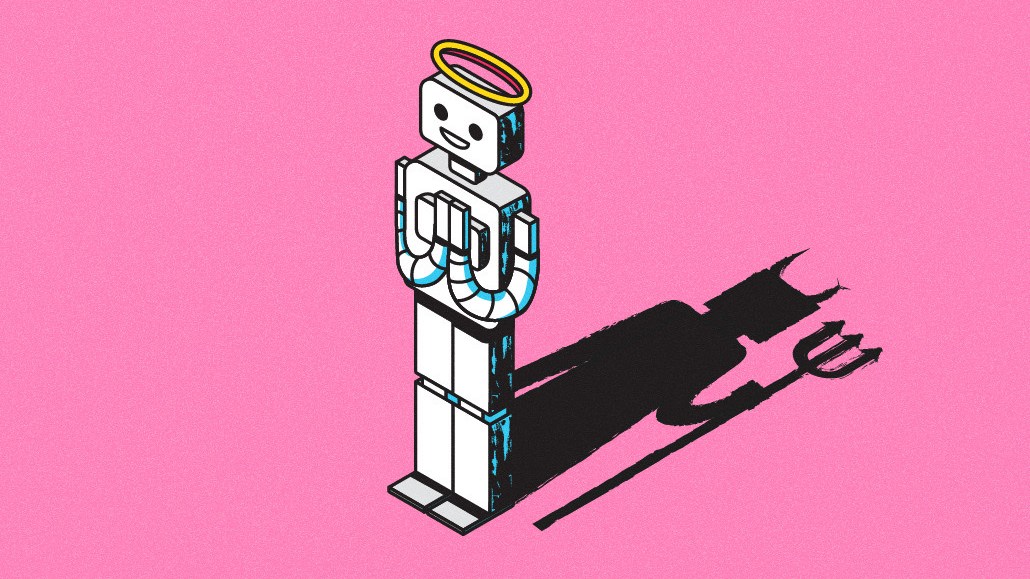

As AI attention builds, so does the tension with how to handle it

The backlash was bound to happen.

After months of tech companies racing to roll out various artificial intelligence tools, the sector now finds itself coming under increased scrutiny. But that doesn’t seem to be stopping anyone from building it — or slowing down many from buying it.

Last week, tech experts and regulators alike took public aim at the burgeoning AI industry. European Union officials introduced new legislation to regulate AI, the British government rolled out a white paper laying out a “pro-innovation approach” to AI and Italy became the first government to ban OpenAI’s ChatGPT due to data privacy concerns.

In the U.S., the Center for AI and Digital Policy, an AI think tank, petitioned the Federal Trade Commission to get involved. And a separate public letter signed by more than 1,000 tech leaders (including Apple Co-Founder Steve Wozniak, Pinterest Co-Founder Evan Sharp and numerous AI experts) urged companies themselves to “pause” for at least six months the training of AI systems more powerful than OpenAI’s recently released GPT-4.

Those concerns don’t appear to be stopping companies building AI from forging ahead with their own updates. Amid last week’s calls for a slowdown, Microsoft teased new ads for its ChatGPT-powered Bing, Adobe touted a new partnership with Prudential Insurance to use AI for “personalized financial experiences,” and Google revealed a new deal with the coding platform Replit to further scale AI software.

The current lack of regulatory clarity around concerns such as data ownership and intellectual property rights poses risks to using ChatGPT, according to Gartner senior analyst Nicole Greene. She said governments and businesses need to collaborate to “help society shape expectations,” but warned that preemptively regulating the sector might end up hindering research and advancements.

“[Marketers] urgently need to compile a list of active use cases impacted by generative AI and collaborate with peers to establish policies and practical guidelines to steer its responsible use,” Greene said. “New vendors are appearing every day and organizations need to understand both their primary use cases, but ensure that they have the necessary transparency, trust and security standards to apply these technologies in support of brand efforts.”

According to the 2023 edition of Stanford University’s “AI Index Report,” which tracks AI’s progress, perceptions and impact around the world, incidents of misuse of AI have increased 26x in the past decade. The in-depth report, published this week, also found that the number of bills passed into law around the world mentioning “artificial intelligence” increased from 1 in 2016 to 37 in 2022. And perhaps somewhat surprisingly, the report also notes that corporate investment in AI decreased last year for the first time, dropping from $276.1 billion in 2021 to $189.6 billion in 2022.

Beyond the giants, the founders of some AI-focused marketing-tech startups insist there’s a way to develop AI safely despite ongoing concerns.

Among the optimists is Hikari Senju, CEO and founder of Omneky, an advertising platform using AI to generate social media ads and product images. He called warnings of AI taking over humanity “luddite’s thinking,” adding that he doesn’t think a “singular platform will outsmart the collective wisdom of humanity.” Although he favors regulation that protects people from the potential dangers of AI, Senju doesn’t believe humans are at risk anytime soon.

“The question really is, how can AI further empower humanity to better communicate with each other and actually make the hundred billion trillion neurons of the human collective brain level up in terms of its bandwidth and its communication?” Senju asked. “That’s really where the potential is in terms of this technology.”

Despite Senju’s characterization of them as luddites, AI experts calling for a slowdown aren’t necessarily hiding behind their flip phones. Many are AI pioneers who have spent decades researching and developing models for various applications. However, the letter, published by the Future of Life Institute, a nonprofit backed by Elon Musk, also faced criticism of its own after observers noticed fake signatures, and even some experts who actually signed it later disagreed with the organization’s approach.

Rather than a total pause, others emphasize the need for more education, both to train more people around the world to develop AI responsibly and to teach the government officials tasked with regulating it. Bharat Krish, the former chief technology officer of Time, said there’s always “going to be good and bad, and hopefully the good wins out over the bad.”

“The genie’s out of the bottle,” said Krish, who is now an advisor for AI startup Fusemachines. “I don’t think you can really stop it. I think something like signing a letter is futile, I don’t know what it accomplishes. Because if it’s not OpenAI, there are a number of others working on it including companies that we haven’t heard about yet.”

Yair Adato, co-founder and CEO of Bria, a generative image and video startup, said the key is to think about limitations from the beginning, such as using “responsibly” sourced content. Rather than train Bria’s AI with questionable content, he said, feeding AI a healthy diet of knowledge and inputs from the beginning helps solve problems around copyright concerns, data privacy, bias and brand safety.

When he was raising money from investors three years ago, Adato said, he had to choose whether to go into the business of generating content or detecting it. He chose the former, saying it was important to provide an alternative to open-source models, and added that the business model was also better.

“If you think about it to start with, then you don’t need a guideline,” Adato said. “If you don’t put porn in it, then you don’t need to avoid it since the system doesn’t know what it is and you don’t need a guardrail.”
