
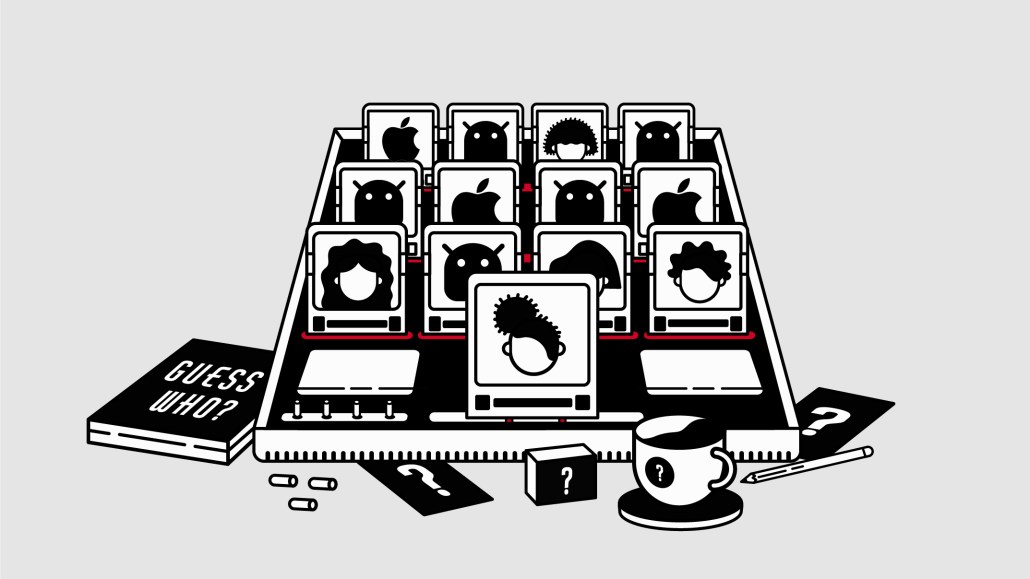

Creator platforms emerge as a front in misinformation battles

The deplatforming of Donald Trump that began on Twitter has touched off profound debates about the role the largest tech platforms play in public discourse.

It was also the first of several dominoes to fall last week, as other platforms clamped down on content and goods associated with the people who stormed the U.S. Capitol on Jan. 6 and suddenly found themselves assessing hate speech and disinformation in a new way.

This past week, Patreon began expanding the list of keywords its automated content monitoring tools and review teams use to look for content that violates its policies. It is the second time in three months that the company has changed how it handles unacceptable content: in October, Patreon banned a number of accounts focused on the QAnon conspiracy theory and suspended or warned several others.

“We do not want [Patreon] to be a home for creators who are inciting violence,” said Laurent Crenshaw, Patreon’s head of policy. “We’re going to put the person power and the thought energy into making sure we’re eliminating and mitigating these in the future.”

Patreon also suspended several user accounts last week and is considering banning them from its platform, and it is examining several others to determine whether they should be suspended. Patreon has analyzed hundreds of accounts since it instituted its QAnon policy, a “small number” of the more than 100,000 creators that use its platform, a spokesperson said. Most of them are small earners, generating a few hundred dollars per month from patrons.

But they are part of a much larger reckoning the internet is having with dangerous, hateful and harmful speech.

“More and more companies are going to have to invest resources into developing not just community policies but answer questions like, ‘What is misinformation on our platform,’ and ‘How are we going to regulate it?’” said Natascha Chtena, editor in chief of the Harvard Kennedy School’s Misinformation Review. “That takes a lot of resources.”

A platform like Patreon is, almost by design, hoping to welcome and support a broad array of viewpoints and speech, Crenshaw said.

That support of speech disappears when it “reaches the point of real-world harm,” a threshold the country crossed quite suddenly on Jan. 6. Misinformation about the legitimacy of the recent presidential election, for example, might have been regarded as merely objectionable two weeks ago; now it looks dangerous.

“Some of these people we’re talking about, the Ali Alexanders of the world, were seen as being controversial until last week,” Crenshaw said, referring to one person whose Patreon account is under review (Crenshaw spoke to Digiday on Jan. 13).

All of these platforms, broadly speaking, have similar views about what kinds of speech are tolerable, though the level of specificity in their policies varies. Medium, for example, specifically identifies conspiracy theories, as well as scientific and historical misinformation, as categories of speech it does not allow; Substack’s content policy bans content that promotes “harmful or illegal activities” or “violence, exclusion or segregation based on protected classes.”

But how far each platform goes in monitoring this varies as well. When deciding whether an account should be suspended or removed, Patreon looks beyond the content hosted directly on its platform to what its creators say and do on other social platforms and even in the real world, Crenshaw said. It uses a mixture of automated tools, which scan for keywords, and an internal team of reviewers, who examine accounts and content more closely.

By contrast, Substack takes a much more hands-off approach, viewing the relationships it facilitates as more open-ended. “With Substack, readers choose what they see,” the site’s cofounders wrote in a blog post published late last year. “A reader makes a conscious decision about which writers to invite into their inboxes, and which ones to support with money.”

Substack and Medium both declined to comment for this story.

Which approach is right may be in the eye of the beholder, and overly restrictive approaches can have negative consequences. The tech and media journalist Simon Owens moved his own newsletter from Tinyletter to Substack in 2019, in part because of Tinyletter’s aggressive moderation filters. “If you used a single word that this filter deemed as problematic or spammy, then Tinyletter would lock down your ability to send out the newsletter until a human staff member could review it and unlock your account,” Owens wrote last summer.

How each platform handles these kinds of speech could have implications for its business. In a short period of time, all three companies have built strong brands by providing the foundation for several successful media entrepreneurs.

But if Substack or Patreon were to become known as the platform that supports newsletters that question the legitimacy of the 2020 presidential election results, for example, or newsletters that posit that president-elect Joe Biden is a child molester, what might that do to the halo that now comes with having a presence on those platforms?

Those connections have not yet been made in the popular imagination, and as long as each platform maintains a hands-off approach, they might not ever form.

“If I found out tomorrow that [Substack] gave Milo Yiannopoulos a big grant the way they did with Matthew Yglesias, for example, that might give me pause,” Owens said in a separate interview. “But I don’t see anything there now that makes me rethink things.”

The person who galvanized the spread of so much misinformation, both directly and indirectly, over the past four years is just days away from becoming a private citizen whose communications reach will be much curtailed.

But the problem he unleashed isn’t going away, and some, including Crenshaw, are welcoming the idea of different platforms working together on standards to combat it.

“It’s actually shocking that different platforms have not yet had these conversations in an organized manner,” Chtena said. “I think it is a challenge for smaller companies, and that needs to be recognized.”
