Elizabeth Lee is global strategist at creative marketing agency gyro.

What do Donald Trump’s hair, Caillou and Mein Kampf have in common? They all reveal fissures in the fabric of the algorithms that run our world.

Algorithms are everywhere. From the flash crash to filter bubbles, retargeting, recommendations and even the news we read, the air is full of dire predictions of their effects.

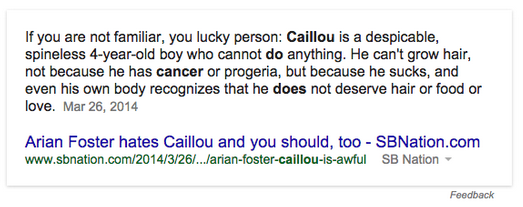

But those effects are largely hidden from us, revealed only by the sometimes funny (and often disturbing) mistakes that result from computer logic. For example, if you type the name of Canadian children’s show character “Caillou” into Google’s Direct Answer algorithm, you get a rather unexpected result:

Poor Caillou is fictional, and can take the heat. But often, more pernicious effects are seen from rogue algorithms.

This past month, Google user Jacky Alciné tweeted that the company’s photo app kept tagging photos of him and his girlfriend as “gorillas.” Alciné is African American.

And people weren’t laughing when Gawker tricked Coca-Cola’s #makeithappy algorithm into retweeting the entire text of Mein Kampf, or when Facebook’s real names algorithm kept prompting Native Americans to change their names.

When blogger Eric Meyer received targeted ads from Facebook’s “Year In Review” app that featured his 6-year-old daughter, who had died earlier that year, he wrote, “This algorithmic cruelty is the result of code that works in the majority of cases … but for those of us who have lived any one of a hundred crises, we might not want a look.”

Researchers Christian Sandvig and Karrie Karahalios have studied how hard it is to predict what an algorithm will do in the wild. Sandvig ties algorithmic fiascoes to several causes: the cultural bias of their creators, shortsighted design, or machine learning that reveals uncomfortable yet true prejudices.

Often, the general public doesn’t even notice algorithms at work. Karahalios’s research on Facebook found that only 37.5 percent of people were aware that an algorithm determined their newsfeed content. When they found out, reactions were visceral. Some felt betrayed at the thought that content from close friends and family was being “hidden” from them. Researchers at Harvard Business School classify these reactions under “algorithm aversion.”

But algorithms aren’t going away, and according to many in the field, attempting to promote widespread “algorithm literacy” is a doomed task. They argue it would be more efficient to nominate a team of experts to regulate them.

This proves problematic when you consider how such an approach centralizes responsibility. Specifically, what happens when those “experts” aren’t trained to take morality or their own biases into account? Will we get another mortgage interest rate calculator that discriminates based on ethnicity and gender?

“Even if they are not designed with the intent of discriminating against those groups, if they reproduce social preferences even in a completely rational way, they also reproduce those forms of discrimination,” said David Oppenheimer, professor of discrimination law at U.C. Berkeley, in a recent New York Times article.

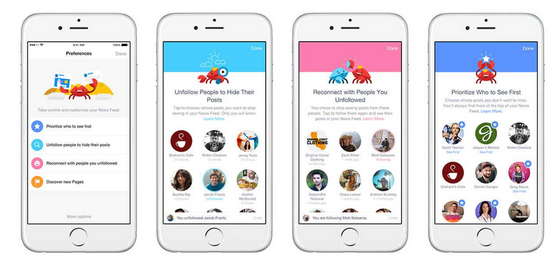

For designers, Karahalios’s research contains a grain of hope that there’s another way. Once the initial shock of discovering the algorithm wore off, people actually became more engaged, felt more empowered and spent more time on the social network. Karahalios suggests, “If by giving people a hint, a seam, a crack as to an algorithm being there, we can engage people more, maybe we should start designing with more algorithm awareness.”

A paper by Wharton researchers supports this idea, finding that people are more willing to trust and use algorithms if they are allowed to tweak the output a little.

Companies are starting to experiment with doing just that. Last week Facebook updated its iOS app to give people more transparent control over their newsfeed experience.

Others are blending human and machine to try to get the benefits of algorithmic efficiency without the gaffes. Twitter recently revealed the plans for its “project lightning,” a feed of tweets that users can tune into. A team of editors in Twitter’s major markets will use data tools to extract emerging trends, but will ultimately make the call about what tweets will be included.

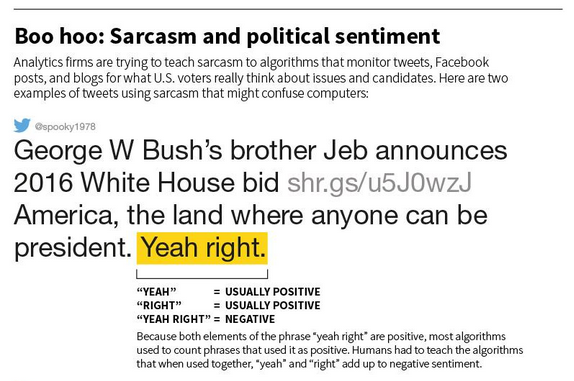

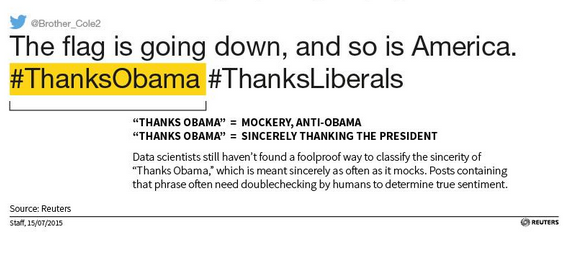

Meanwhile, social media analytics firms are trying to use human insight to adapt their algorithms’ ability to parse the most difficult content: sarcasm and mockery. Mohammed Hamid, CTO of Two.42.solutions, says he’s had to teach his computer system that when the normally neutral term “hair” is mentioned in a tweet about the famously coiffed Donald Trump, the tweet is actually negative.
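To make that kind of human-taught rule concrete, here is a minimal sketch of how a context-dependent override might sit on top of a simple word-score sentiment model. It is purely illustrative and does not describe Hamid’s actual system: the word list, the scores, and the names `BASE_SCORES`, `CONTEXT_OVERRIDES` and `score_tweet` are all assumptions made up for this example.

```python
import re

# Hypothetical base lexicon: words not listed (such as "hair") count as neutral.
BASE_SCORES = {"great": 1, "love": 1, "awful": -1, "hate": -1}

# Hypothetical human-supplied overrides: when the context word appears
# anywhere in the tweet, the target word takes the given score instead.
CONTEXT_OVERRIDES = {("trump", "hair"): -1}


def tokenize(text):
    """Lowercase the text and split it into runs of letters."""
    return re.findall(r"[a-z]+", text.lower())


def score_tweet(text):
    """Sum per-word scores, applying any override whose context word is present."""
    tokens = tokenize(text)
    present = set(tokens)
    total = 0
    for token in tokens:
        score = BASE_SCORES.get(token, 0)
        for (context, target), override in CONTEXT_OVERRIDES.items():
            if token == target and context in present:
                score = override
        total += score
    return total
```

Under these assumptions, a tweet that mentions “hair” alone stays neutral, while one that mentions “hair” alongside “Trump” is scored as negative. The override table is the part a human editor would maintain; everything else runs unattended.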

Whether the hybrid algorithm-human model will work, or will further exacerbate existing biases, remains to be seen. In fact, the Wharton research suggests that algorithms get “worse” when humans intervene.

Right now there are few safeguards in place to promote responsible algorithm creation, and the reported lack of diversity in Silicon Valley companies, along with a cultural attitude embodied by the adage “It’s better to ask for forgiveness than permission,” suggests the problem isn’t about to be solved. But we have a responsibility to keep asking. A string of code isn’t ashamed when we all laugh at its mistakes, but hopefully its creators will be.

Trump image courtesy of Andrew Cline / Shutterstock.com
